This has been in many ways a transitional year for the Notre Dame CSE department. With the elimination of Fundamentals of Computing 2 and the shift of Data Structures from junior to sophomore year, core CS concepts are being taught earlier, mainly to align with industry demands. However, the department has also experienced some growing pains (or “scalability problems,” if you prefer buzzwords), as enrollment has more than doubled in the past 2 years. Meanwhile, a growing contingent of people in industry claim that a college degree is wholly unnecessary to work in tech in 2017. At the heart of the issue is the disconnect between a fast-moving, ever-changing industry and the mostly static structure of academic institutions. How can a degree program with years of baggage from academic traditions and norms hope to keep up with an industry that changes by the month?

The Association for Computing Machinery (ACM) and ABET, the organization that accredits collegiate engineering programs, each publish their own guidelines (ACM, ABET) for designing an engineering curriculum. While the guidelines arguably have some merits, there is a more fundamental problem than the exact text of the recommendations. It’s the difference between Waterfall and Agile: academic institutions have to be more concerned with making sure their CS department aligns with the exact accreditation requirements than with keeping their CS program on the “bleeding edge” of the industry. When departments have to operate at the pace of the accreditation board, waiting for it to issue changes to the curriculum on a 2-3 year cycle, they lose some of the ability to pilot new and innovative changes to the program. Notre Dame, to its credit, does a very good job of aligning with the ABET criteria, but this year we still realized that our curriculum had begun to lag behind the industry and our Peer Institutions™, and we suddenly had to make drastic changes to keep up. Wouldn’t it be better to make a number of small, iterative changes every year rather than a massive overhaul every few years?

Having TA’d for the new intro course sequence (Fundamentals -> Data Structures) in this year of change, I naturally started thinking about the curriculum and wondering what my “ideal” CS program would look like if we could start from scratch. After thinking about this for much of the year, I came up with some (very) rough design goals (numbered below) and specific changes (bulleted) that I think would benefit our CS program.

1. Strong engineering background. Students should be familiar with engineering processes, tools, and lingo. Students should be able to communicate highly technical ideas to both technical and non-technical audiences, in both writing and speech.
   - Teach LaTeX in EG101 for technical writing. Focus more on presentation skills and technical/research writing.

2. Streamline the curriculum and remove redundancy. Teach critical topics earlier.
   - Move Fundamentals 1 to spring semester of freshman year
     - Teach in Python
     - Focus on high-level programming concepts, plus basic OOP
   - Combine the old Fundamentals 2 with Systems Programming
     - Fall semester of sophomore year
     - C/C++
     - Unix methodology
     - Shell scripting
   - Combine Computer Architecture and Logic Design
     - von Neumann concepts from Logic Design
     - Teach x86 rather than MIPS
     - How do compilers work?
   - Data Structures
     - Spring of sophomore year
     - Dive deep into OOP
     - Abstract and concrete data structures
     - Basic algorithms
   - Algorithms
     - Move to fall of junior year
     - Require for both CPEG and CSE

3. Maximum flexibility in specialization. Not everyone wants to be a software engineer. Leaving plenty of room for CSE electives allows students to choose (with the help of their adviser) a sequence of electives that suits their career goals.
   - Since the “core” computer science curriculum is mostly finished by the middle of junior year, many CSE elective spots open up.
   - Expand the selection of available concentrations, and encourage students to work with advisers to build their own concentration/elective sequence to achieve their career goals
   - Allow students to explore different career paths

4. Maintain Notre Dame’s “holistic education.” The curriculum should be designed to take advantage of the value that liberal arts classes bring to a technical education. Many liberal arts classes are directly relevant in a technical context (philosophical logic, computer ethics, etc.), and they also let students explore creative outlets that complement their technical education. In some ways, computer science is like art, requiring a great deal of creativity and the ability to find meaning in abstractions.
   - Encourage students to think about the ethical implications of research in the specific elective classes they take
   - Encourage students to use liberal arts classes to complement their technical education

5. Focus on building a portfolio rather than a resume. Students should have a set of robust, long-term, well-documented projects that not only build a professional reputation but also encourage them to think creatively, both in identifying problems and in designing solutions. “A resume says nothing of a programmer’s ability. Every computer science major should build a portfolio.” (source)
   - Add a yearlong senior design project for both CS and CPEG. Students work with faculty advisers to take a project from a problem statement to an idea, then through development, documentation, and codebase maintenance. Encourage students with complementary skillsets (from their concentration paths) to work together.
   - Encourage elective classes to include projects that can be added to a student’s portfolio. Such projects should be cleanly written and well documented: something that can be shown to an employer.

I realize I’ve digressed somewhat from the original point of this blog post, so I want to take a moment to say that, despite all my criticism, I really do like the Notre Dame CS curriculum. It isn’t perfect, but it works, and it works quite well. I’m only temporarily invoking my graduating-senior/old-man complaining rights (“I could do that better!”) because I really enjoyed my time here and want our department to continue to be successful.

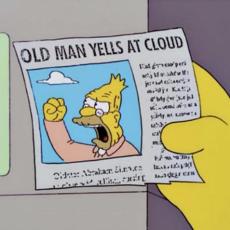

Pictured: Nick Aiello writing this blog post, 2017

Despite what Silicon Valley says, I think there are still benefits to an undergraduate CS education. Many of the arguments to the contrary seem to hinge on a faulty equivalence between programming and computer science. Computer Science != Programming. Programming is certainly an important part of computer science, but it’s only part of the picture. You can learn to be a good programmer without a college education. Programming is just a tool for expressing your ideas. An artist might express an idea in paint, photography, or a pencil sketch (just as a programmer might use Java or C++), but the idea itself is independent of the medium. A bachelor’s degree program provides four years to hone the whole skill: not only expressing an idea in code, but creating and refining that idea in the first place. It’s also a risk-free environment to explore career options without the overhead of switching jobs, and to pursue new and innovative project ideas that might be too risky for industry. I don’t regret choosing to get a college degree in CS, even if I could have gotten the same job through a coding bootcamp, because “getting the job” isn’t really the important part: it’s about the experience.